⚡︎ Thunder Compute ⊹ GPU Cloud to enable AI workloads

Designed a platform for developers to host and borrow GPU compute power. Backed by YC.

Role: Product Designer

Team: 2 Founders

Stage: Pre-funding

Outcome: Helped the founders secure $700k in seed funding from YC and angel investors to build Thunder full-time

Timeline: 6 Months

Thunder Compute was founded in 2023 as a GPU-sharing service. Developers use GPUs to perform the complex calculations that train AI models. Thunder aims to make GPUs more accessible to developers, researchers, and smaller companies.

⚡︎ thunder explain --impacts

> I established Thunder’s first product framework.

I designed the initial MVP for Thunder Compute. The founders built my designs and received $500k in initial funding from YC, one of the best-known early-stage startup accelerators and investors in the world.

Role

- Product designer

- 6 months

Team

- 1 designer

- 2 founders

Results

- Service frameworks

- User research

- Competitor analysis

- Prototyping

- Design delivery

What I delivered

⚡︎ thunder snapshot --prototypes

> See Thunder in action…

⚡︎ thunder load --logos

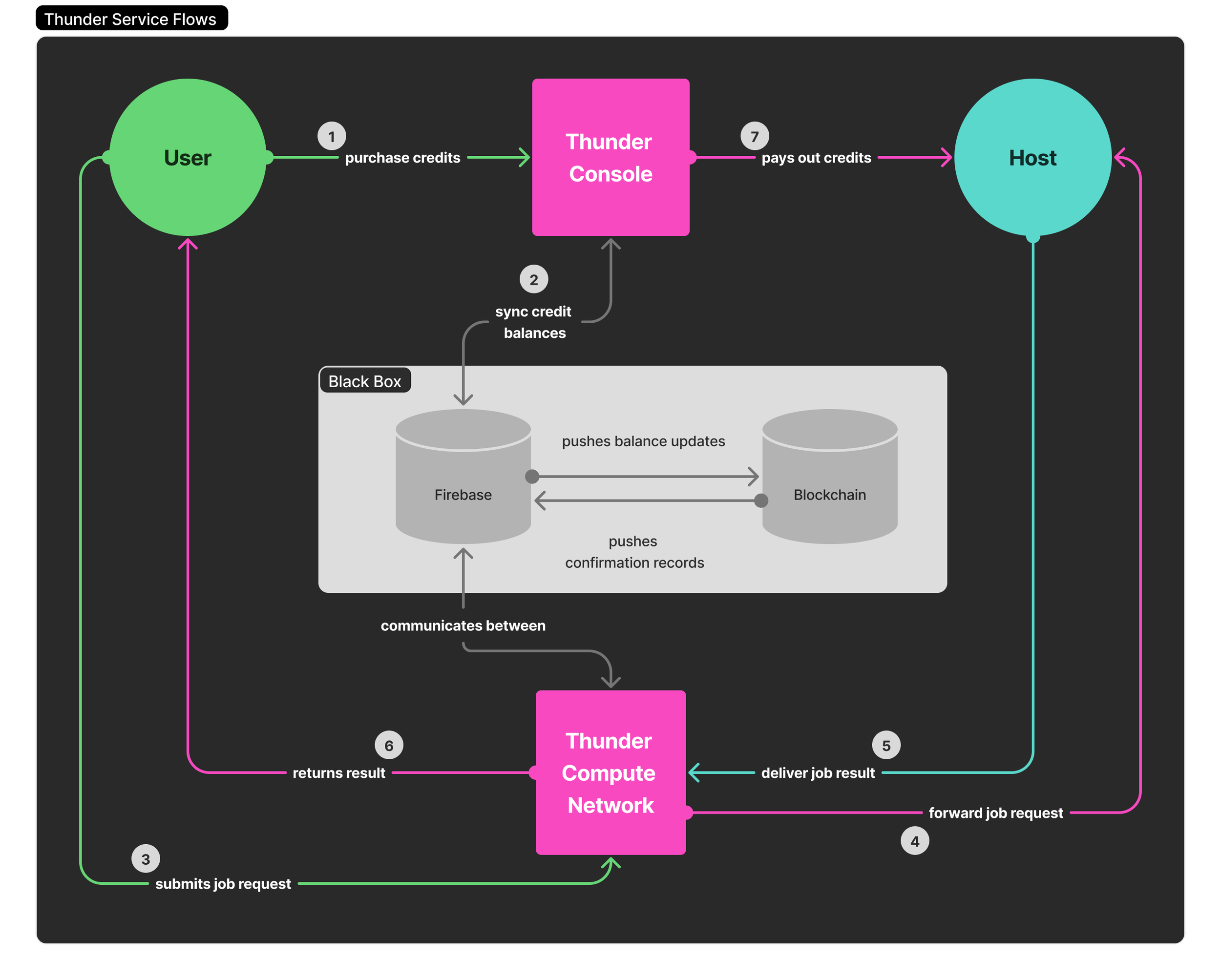

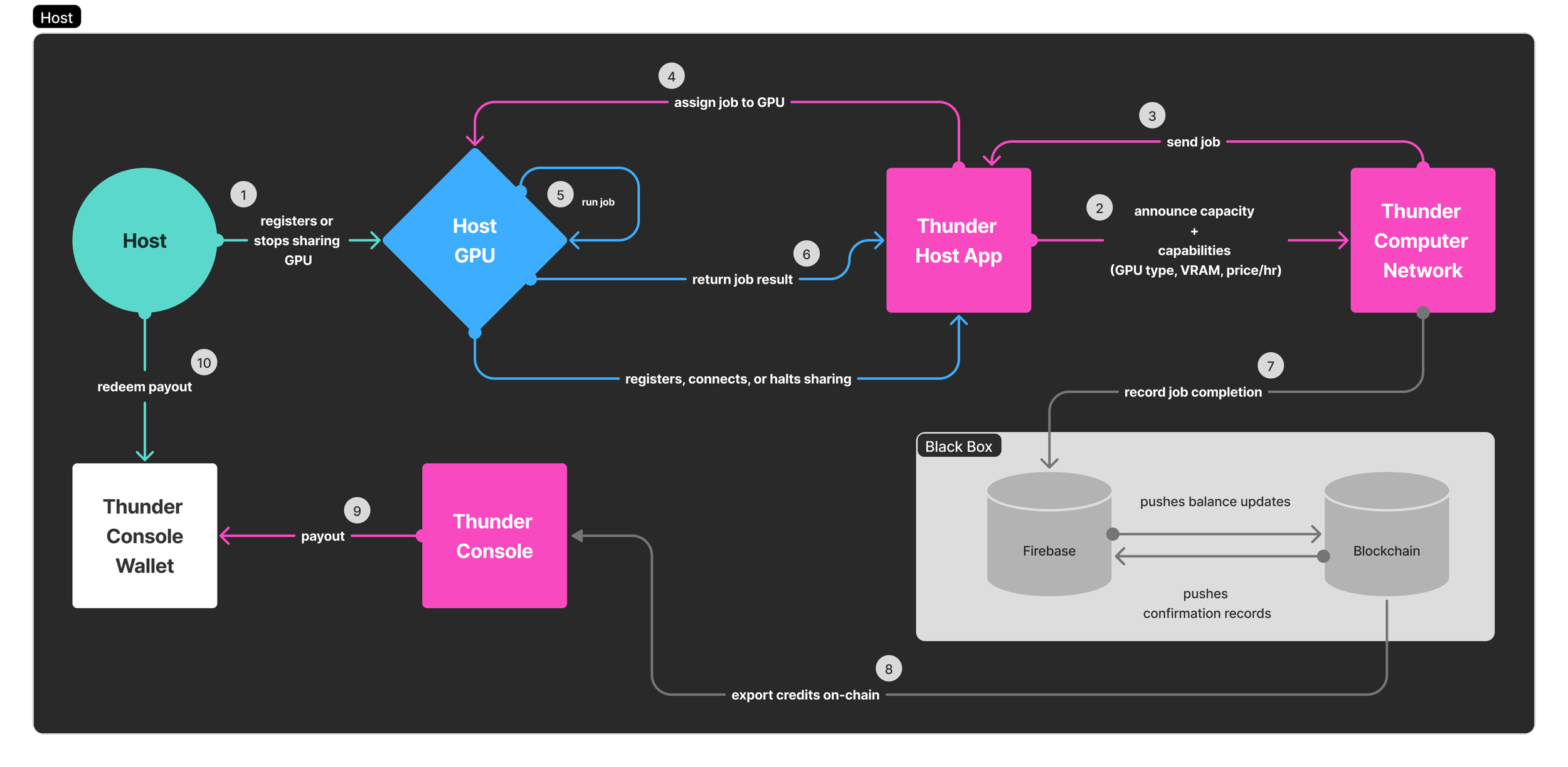

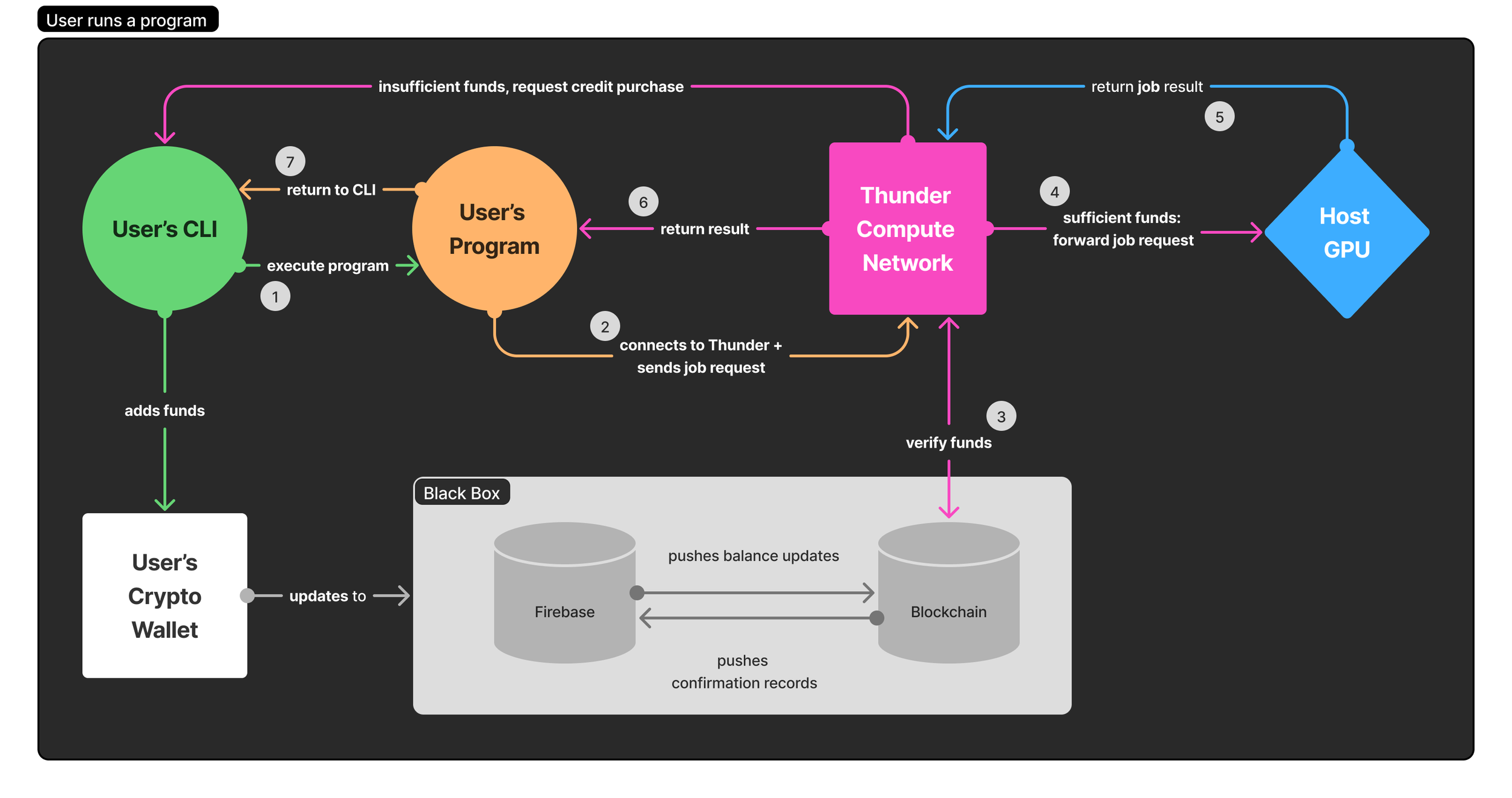

⚡︎ thunder draw --service_diagrams

> I designed Thunder’s service models.

⚡︎ thunder remember --early_mission

> Connecting developers with idle GPUs (worth billions and just sitting in people’s homes) to support AI advancements.

Brian first encountered the problem of GPU scarcity while sharing compute resources at his research lab at Georgia Tech.

While working on GPU-heavy workloads, Brian ran into a problem many researchers face: GPUs were too expensive, unavailable when needed, or locked up by institutional bottlenecks.

At least his team had access to dedicated GPUs. That’s not the case for everyone in this space.

Anywhere from 10 to 15 researchers were scheduling 8 GPUs via an Excel spreadsheet, and demand was high. If one program ran past its slot, every booking after it was affected.

⚡︎ thunder find --opportunity

> Developers are paying for the cost of scarcity.

Currently, individual developers, small teams, and startups are forced to pay hundreds of thousands of dollars up front for GPU capacity they may only partially use. It’s an unsustainable cost barrier.

We gathered informal feedback from students and researchers about GPU accessibility, payment expectations, and trust barriers.

> Grad students and PhD researchers in ML/AI loved the idea of “renting a GPU quickly”, but didn’t want surprises in billing or availability.

> Independent developers and early-stage founders were price-sensitive and averse to a heavy onboarding experience.

> Small startups wanted to explore the computing space but were unable to afford AWS-scale pricing.

⚡︎ thunder ask --what_if

> We could arrange a crowdsourced GPU hosting/borrowing service?

This approach lets users keep working within the reservation model that cloud providers require while dramatically lowering the effective cost of short bursts of compute. Users could pay a fraction of today’s prices and still get the performance they need, and GPU owners with idle machines could earn income by renting out their hardware online.

Thunder looked to Helium’s decentralized wireless network as inspiration for its payment model. Helium enables individuals to contribute their 5G hotspots and be rewarded in a transparent, tokenized way.

Thunder wanted to mirror this system: GPU hosts could be rewarded for contributing compute, while borrowers could pay using a simplified, blockchain-backed mechanism that ensured trust and minimized fraud.

⚡︎ thunder peek --competitors

> I took a look at similar competitor offerings.

Through this initial analysis, we began to map our market positioning. Like Vast, we wanted a decentralized hybrid structure: crowdsourced GPUs managed through a service layer. Vast’s peer-to-peer approach also inspired Thunder, but we aimed to layer in stronger safeguards and build a platform centered on customer feedback.

We initially planned to use crypto for payments, having seen the success of competitors such as Render. At the time, blockchain-based contracts were seen as a way to create transparent, automated payments between strangers. Lastly, we wanted Thunder to feel quick and familiar, not bogged down by AWS’s enterprise setup or Render’s crypto hurdles.

⚡︎ thunder research --trust

> Designing trust into the service

I found that, unlike a centralized cloud service, a peer-led marketplace lacks a built-in layer of trust. A peer-to-peer model exposes both renters and hosts.

Without a central authority, two risks stood out:

Risk 1: Minimal guarantees on delivery or payments

A renter might pay but never actually receive working GPU access. Or, a host might provide GPU time but never get paid.

Risk 2: Identity risk and gaps in accountability

Users can be anonymous, making it easier to disappear after fraud or misuse. And without oversight, bad actors could run malicious workloads (e.g., crypto mining, spam, hacking) on someone else’s hardware.

To embed trust into the system, I removed direct host–user interaction and designed a network-mediated model. This design created an implicit layer of accountability: the network itself became the central source of truth, while preserving the flexibility and affordability of peer-to-peer GPU sharing.

Pooled resources for abstracted access

Hosts contributed GPUs into a shared network. Users then borrowed GPU time from the network without ever knowing which host’s hardware they were on. By anonymizing the host–user relationship, both sides gained protection from fraud, misuse, and targeted exploitation.
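The pooled model above can be sketched in a few lines of Python. This is only an illustration of the idea, not Thunder’s implementation: the `GpuPool` class, its method names, and the opaque lease IDs are all hypothetical.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class GpuPool:
    """Hypothetical broker that hides host identities behind opaque lease IDs."""

    _idle_hosts: list[str] = field(default_factory=list)
    _leases: dict[str, str] = field(default_factory=dict)  # lease_id -> host_id

    def contribute(self, host_id: str) -> None:
        """A host adds a GPU to the shared pool."""
        self._idle_hosts.append(host_id)

    def borrow(self) -> str:
        """Reserve any idle GPU; the caller receives only an opaque lease ID."""
        if not self._idle_hosts:
            raise RuntimeError("no idle GPUs in the pool")
        host_id = self._idle_hosts.pop(0)
        lease_id = secrets.token_hex(8)  # random ID, unrelated to the host ID
        self._leases[lease_id] = host_id
        return lease_id

    def release(self, lease_id: str) -> None:
        """End a lease and return the GPU to the pool."""
        self._idle_hosts.append(self._leases.pop(lease_id))
```

The borrower only ever holds the lease ID, so the mapping from lease to host stays inside the network layer.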

Duplicating workloads as a quality check

Workloads were distributed across multiple GPUs, ensuring reliable outputs even if one underperformed. This also served as a check against malicious hosts pretending to have a GPU in order to earn crypto.
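One way to picture the duplication check is a majority vote over replicas. The sketch below assumes that results are deterministic and comparable by equality, which real GPU workloads often are not; the function and host names are hypothetical and not taken from Thunder’s system.

```python
from collections import Counter
from typing import Callable, Hashable, Sequence

# Hypothetical stand-in: a host is anything that maps a job input to a result.
Host = Callable[[int], Hashable]


def run_with_replicas(job_input: int, hosts: Sequence[Host]) -> tuple[Hashable, list[int]]:
    """Run the same job on every given host and accept the strict-majority result.

    Returns the accepted result and the indexes of hosts that disagreed with it.
    """
    results = [host(job_input) for host in hosts]
    winner, votes = Counter(results).most_common(1)[0]
    if votes <= len(hosts) // 2:
        raise RuntimeError("no majority result; rerun on different hosts")
    dissenters = [i for i, r in enumerate(results) if r != winner]
    return winner, dissenters


def honest_host(x: int) -> int:
    return x * x  # does the real work


def fake_host(x: int) -> int:
    return 0  # claims to have done the work but returns junk


result, flagged = run_with_replicas(7, [honest_host, fake_host, honest_host])
```

Here `result` is the value the two honest hosts agree on, and `flagged` lists the index of the host whose output did not match.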

Trust ratings on host hardware to incentivize quality host performance

By continually grading host performance, we could learn more about what the ideal host looks like in terms of connectivity, compute speed and other analytics. This would help us refine our system and also help users trust the GPU pool more.
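As a rough illustration of continual grading, a host’s score could be an exponential moving average over per-job observations. The starting score, the weights, and the inputs (`connectivity` and `speed`, both assumed to be pre-normalized to the range 0 to 1) are assumptions made for this sketch, not values from Thunder.

```python
from dataclasses import dataclass


@dataclass
class HostRating:
    """Hypothetical running trust score in [0, 1] for one host."""

    score: float = 0.5  # assumed neutral starting point for a new host
    alpha: float = 0.2  # assumed weight given to the newest observation

    def record(self, connectivity: float, speed: float, correct: bool) -> float:
        """Blend one job's observation into the score and return the new score.

        An incorrect result counts as a zero observation regardless of speed.
        """
        observation = 0.5 * connectivity + 0.5 * speed if correct else 0.0
        self.score = (1 - self.alpha) * self.score + self.alpha * observation
        return self.score
```

A moving average weights recent behavior more heavily than old behavior, so a host that degrades loses standing gradually instead of being judged on a single job.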

⚡︎ thunder surface --insights

> Without convenient payment methods, users were reluctant to try Thunder

Helium’s crypto model shows how individuals can passively contribute network coverage in exchange for crypto. Thunder envisioned something similar, with hosts contributing GPU compute and earning tokens. But for those renting GPUs, consistency and ease of use often outweighed cost. Many chose more expensive providers simply because they offered straightforward payments and reliable compute access. This highlighted a key adoption barrier:

Requiring users to set up a crypto wallet created friction that overshadowed Thunder’s extremely competitive pricing.

My user flows surfaced this risk early, and the team was able to pivot away from crypto as the sole payment method.

⚡︎ thunder write --reflections

> A shift in perspective

Thunder taught me that early-stage startup design isn’t just about making wireframes or diagrams. It’s also about shaping trust, strategy, and credibility at the very beginning of a company’s story.

Thunder was just an idea when I joined, so my work became the team’s first coherent framework. Through my service design and research, I helped the founders refine their vision and set them up for success.

Looking ahead, I also explored future product avenues with the founders to strengthen investor pitches and open doors for growth:

- Optimizing data centers for higher GPU efficiency.

- Serving corporate clients with more predictable, scalable, and cheaper compute than typical cloud providers.

- Helping individual hosts optimize their own GPU performance.

- Supporting private rentals to specific users or teams so they can optimize their own resources.

- Expanding to other niches, such as rendering workloads, to attract the kind of customers Render Network serves.

Although Thunder’s services have shifted as they focus on building their core infrastructure and respond to customer feedback, the baseline flows I designed have mostly remained intact. As the company matured, my work was handed off to an external design team.

Through this work, I learned that the real value of design at the founder stage lies in instilling confidence in both users and investors. My early designs gave Thunder a foundation to scale upon.